Deploy a GKE Cluster with Portworx

Let's deploy a nice GKE cluster with a customized Portworx deployment that uses security capabilities and encrypted volumes.

Get your own GCP account, download gcloud and authenticate on your laptop.

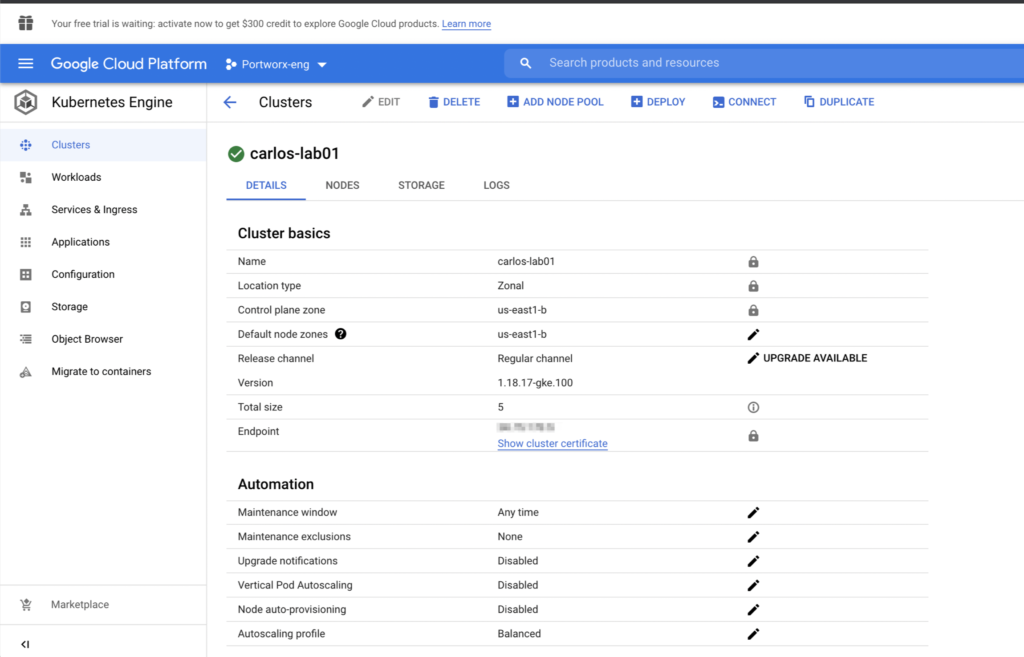

gcloud container clusters create carlos-lab01 \
  --zone us-east1-b \
  --disk-type=pd-ssd \
  --disk-size=50GB \
  --labels=portworx=gke \
  --machine-type=n1-highcpu-8 \
  --num-nodes=5 \
  --image-type ubuntu \
  --scopes compute-rw,storage-ro,cloud-platform \
  --enable-autoscaling --max-nodes=5 --min-nodes=5

gcloud container clusters get-credentials carlos-lab01 --zone us-east1-b --project <your-project>

gcloud services enable compute.googleapis.com

Wait until your cluster is available.
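Cluster creation can take several minutes. As a minimal sketch, the helper below polls `gcloud container clusters describe` until the cluster reports `RUNNING`. The function name `wait_for_cluster`, the retry count, and the sleep interval are illustrative assumptions, not part of the original walkthrough.

```shell
# Poll the GKE API until the cluster status is RUNNING.
# Usage: wait_for_cluster <cluster> <zone> [max_tries] [sleep_seconds]
wait_for_cluster() {
  local cluster="$1" zone="$2" max_tries="${3:-60}" pause="${4:-10}"
  local try=1 status
  while [ "$try" -le "$max_tries" ]; do
    # value(status) prints only the status field, e.g. PROVISIONING or RUNNING
    status="$(gcloud container clusters describe "$cluster" \
      --zone "$zone" --format='value(status)' 2>/dev/null)"
    if [ "$status" = "RUNNING" ]; then
      echo "cluster $cluster is RUNNING"
      return 0
    fi
    echo "cluster $cluster status: ${status:-unknown} (try $try/$max_tries)"
    sleep "$pause"
    try=$((try + 1))
  done
  echo "timed out waiting for cluster $cluster" >&2
  return 1
}
```

For the cluster in this post, it could be called as `wait_for_cluster carlos-lab01 us-east1-b`.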

Then you can install Portworx using the operator.

operator.yaml

# SOURCE: https://install.portworx.com/?comp=pxoperator

apiVersion: v1
kind: ServiceAccount
metadata:
  name: portworx-operator
  namespace: kube-system
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: portworx-operator
rules:
- apiGroups: ["*"]
  resources: ["*"]
  verbs: ["*"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: portworx-operator
subjects:
- kind: ServiceAccount
  name: portworx-operator
  namespace: kube-system
roleRef:
  kind: ClusterRole
  name: portworx-operator
  apiGroup: rbac.authorization.k8s.io
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: portworx-operator
  namespace: kube-system
spec:
  strategy:
    rollingUpdate:
      maxSurge: 1
      maxUnavailable: 1
    type: RollingUpdate
  replicas: 1
  selector:
    matchLabels:
      name: portworx-operator
  template:
    metadata:
      labels:
        name: portworx-operator
    spec:
      containers:
      - name: portworx-operator
        imagePullPolicy: Always
        image: portworx/px-operator:1.5.0
        command:
        - /operator
        - --verbose
        - --driver=portworx
        - --leader-elect=true
        env:
        - name: OPERATOR_NAME
          value: portworx-operator
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
              - key: "name"
                operator: In
                values:
                - portworx-operator
            topologyKey: "kubernetes.io/hostname"
      serviceAccountName: portworx-operator

px-enterprisecluster.yaml

# SOURCE: https://install.portworx.com/?operator=true&mc=false&kbver=1.20.8&b=true&kd=type%3Dpd-standard%2Csize%3D150&csicd=true&mz=5&s=%22type%3Dpd-ssd%2Csize%3D150%22&j=auto&c=px-cluster-cb94f533-5006-4299-b6b4-ad8e09690b74&gke=true&stork=true&csi=true&mon=true&st=k8s&promop=true

kind: StorageCluster
apiVersion: core.libopenstorage.org/v1
metadata:
  name: px-cluster-cb94f533-5006-4299-b6b4-ad8e09690b74
  namespace: kube-system
  annotations:
    portworx.io/install-source: "https://install.portworx.com/?operator=true&mc=false&kbver=1.20.8&b=true&kd=type%3Dpd-standard%2Csize%3D150&csicd=true&mz=5&s=%22type%3Dpd-ssd%2Csize%3D150%22&j=auto&c=px-cluster-cb94f533-5006-4299-b6b4-ad8e09690b74&gke=true&stork=true&csi=true&mon=true&st=k8s&promop=true"
    portworx.io/is-gke: "true"
spec:
  image: portworx/oci-monitor:2.8.0
  imagePullPolicy: Always
  kvdb:
    internal: true
  cloudStorage:
    deviceSpecs:
    - type=pd-ssd,size=200
    journalDeviceSpec: auto
    kvdbDeviceSpec: type=pd-standard,size=50
    maxStorageNodesPerZone: 5
  secretsProvider: k8s
  stork:
    enabled: true
    args:
      webhook-controller: "false"
  autopilot:
    enabled: true
    providers:
    - name: default
      type: prometheus
      params:
        url: http://prometheus:9090
  monitoring:
    telemetry:
      enabled: true
    prometheus:
      enabled: true
      exportMetrics: true
  featureGates:
    CSI: "true"

kubectl create clusterrolebinding myname-cluster-admin-binding \
  --clusterrole=cluster-admin --user="$(gcloud info --format='value(config.account)')"

kubectl apply -f operator.yaml

kubectl apply -f px-enterprisecluster.yaml

kubectl get all -n kube-system

NAME   READY   STATUS   RESTARTS   AGE
pod/autopilot-7b4f7f58f4-kchs4   1/1   Running   0   34m
pod/event-exporter-gke-67986489c8-prn9p   2/2   Running   0   41m
pod/fluentbit-gke-bn5nm   2/2   Running   0   41m
pod/fluentbit-gke-f7k2j   2/2   Running   0   41m
pod/fluentbit-gke-h672g   2/2   Running   0   41m
pod/fluentbit-gke-n9664   2/2   Running   0   41m
pod/fluentbit-gke-xjttt   2/2   Running   0   41m
pod/gke-metrics-agent-d64hw   1/1   Running   0   41m
pod/gke-metrics-agent-fhw8l   1/1   Running   0   41m
pod/gke-metrics-agent-gsfvk   1/1   Running   0   41m
pod/gke-metrics-agent-mqm64   1/1   Running   0   41m
pod/gke-metrics-agent-wwjvx   1/1   Running   0   41m
pod/kube-dns-6c7b8dc9f9-q8v75   4/4   Running   0   41m
pod/kube-dns-6c7b8dc9f9-wqthz   4/4   Running   0   41m
pod/kube-dns-autoscaler-844c9d9448-4fx8f   1/1   Running   0   41m
pod/kube-proxy-gke-carlos-lab01-default-pool-a6362dc8-11k6   1/1   Running   0   41m
pod/kube-proxy-gke-carlos-lab01-default-pool-a6362dc8-5lgd   1/1   Running   0   16m
pod/kube-proxy-gke-carlos-lab01-default-pool-a6362dc8-b73f   1/1   Running   0   41m
pod/kube-proxy-gke-carlos-lab01-default-pool-a6362dc8-n5fl   1/1   Running   0   41m
pod/kube-proxy-gke-carlos-lab01-default-pool-a6362dc8-v02w   1/1   Running   0   41m
pod/l7-default-backend-56cb9644f6-xfd65   1/1   Running   0   41m
pod/metrics-server-v0.3.6-9c5bbf784-9z6sm   2/2   Running   0   40m
pod/pdcsi-node-4wprs   2/2   Running   0   41m
pod/pdcsi-node-685ht   2/2   Running   0   41m
pod/pdcsi-node-g42tb   2/2   Running   0   41m
pod/pdcsi-node-ln4tw   2/2   Running   0   41m
pod/pdcsi-node-ncqhl   2/2   Running   0   41m
pod/portworx-api-76kcn   1/1   Running   0   34m
pod/portworx-api-887bl   1/1   Running   0   34m
pod/portworx-api-br4f2   1/1   Running   0   34m
pod/portworx-api-hzfsn   1/1   Running   0   34m
pod/portworx-api-zcd4m   1/1   Running   0   34m
pod/portworx-kvdb-8ls5k   1/1   Running   0   71s
pod/portworx-kvdb-c797z   1/1   Running   0   13m
pod/portworx-kvdb-gmxpv   1/1   Running   0   13m
pod/portworx-operator-bfc87df78-schcz   1/1   Running   0   36m
pod/portworx-pvc-controller-696959f9bc-4kj5v   1/1   Running   0   34m
pod/portworx-pvc-controller-696959f9bc-gn2tw   1/1   Running   0   34m
pod/portworx-pvc-controller-696959f9bc-jmsxn   1/1   Running   0   34m
pod/prometheus-px-prometheus-0   3/3   Running   1   33m
pod/px-cluster-cb94f533-5006-4299-b6b4-ad8e09690b74-9pvws   3/3   Running   0   74s
pod/px-cluster-cb94f533-5006-4299-b6b4-ad8e09690b74-hnzs8   3/3   Running   0   88s
pod/px-cluster-cb94f533-5006-4299-b6b4-ad8e09690b74-j7vrc   3/3   Running   1   34m
pod/px-cluster-cb94f533-5006-4299-b6b4-ad8e09690b74-sgm67   3/3   Running   0   113s
pod/px-cluster-cb94f533-5006-4299-b6b4-ad8e09690b74-xvzxn   3/3   Running   0   13m
pod/px-csi-ext-5686675c58-5qfzq   3/3   Running   0   34m
pod/px-csi-ext-5686675c58-dbmmb   3/3   Running   0   34m
pod/px-csi-ext-5686675c58-vss9p   3/3   Running   0   34m
pod/px-prometheus-operator-8c88487bc-jv9fd   1/1   Running   0   34m
pod/stackdriver-metadata-agent-cluster-level-9548fb7d6-vm552   2/2   Running   0   41m
pod/stork-75dd8b896-g4qqj   1/1   Running   0   34m
pod/stork-75dd8b896-mb2xt   1/1   Running   0   34m
pod/stork-75dd8b896-zjlwm   1/1   Running   0   34m
pod/stork-scheduler-574757dd8d-866bv   1/1   Running   0   34m
pod/stork-scheduler-574757dd8d-jhx7w   1/1   Running   0   34m
pod/stork-scheduler-574757dd8d-mhg99   1/1   Running   0   34m

NAME   TYPE   CLUSTER-IP   EXTERNAL-IP   PORT(S)   AGE
service/default-http-backend   NodePort   10.3.241.138   <none>   80:31243/TCP   41m
service/kube-dns   ClusterIP   10.3.240.10   <none>   53/UDP,53/TCP   41m
service/kubelet   ClusterIP   None   <none>   10250/TCP   33m
service/metrics-server   ClusterIP   10.3.248.53   <none>   443/TCP   41m
service/portworx-api   ClusterIP   10.3.242.28   <none>   9001/TCP,9020/TCP,9021/TCP   34m
service/portworx-operator-metrics   ClusterIP   10.3.247.121   <none>   8999/TCP   35m
service/portworx-service   ClusterIP   10.3.245.123   <none>   9001/TCP,9019/TCP,9020/TCP,9021/TCP   34m
service/prometheus-operated   ClusterIP   None   <none>   9090/TCP   33m
service/px-csi-service   ClusterIP   None   <none>   <none>   34m
service/px-prometheus   ClusterIP   10.3.245.244   <none>   9090/TCP   34m
service/stork-service   ClusterIP   10.3.242.128   <none>   8099/TCP,443/TCP   34m

NAME   DESIRED   CURRENT   READY   UP-TO-DATE   AVAILABLE   NODE SELECTOR   AGE
daemonset.apps/fluentbit-gke   5   5   5   5   5   kubernetes.io/os=linux   41m
daemonset.apps/gke-metrics-agent   5   5   5   5   5   kubernetes.io/os=linux   41m
daemonset.apps/gke-metrics-agent-windows   0   0   0   0   0   kubernetes.io/os=windows   41m
daemonset.apps/kube-proxy   0   0   0   0   0   kubernetes.io/os=linux,node.kubernetes.io/kube-proxy-ds-ready=true   41m
daemonset.apps/metadata-proxy-v0.1   0   0   0   0   0   cloud.google.com/metadata-proxy-ready=true,kubernetes.io/os=linux   41m
daemonset.apps/nvidia-gpu-device-plugin   0   0   0   0   0   <none>   41m
daemonset.apps/pdcsi-node   5   5   5   5   5   kubernetes.io/os=linux   41m
daemonset.apps/pdcsi-node-windows   0   0   0   0   0   kubernetes.io/os=windows   41m
daemonset.apps/portworx-api   5   5   5   5   5   <none>   34m

NAME   READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/autopilot   1/1   1   1   34m
deployment.apps/event-exporter-gke   1/1   1   1   41m
deployment.apps/kube-dns   2/2   2   2   41m
deployment.apps/kube-dns-autoscaler   1/1   1   1   41m
deployment.apps/l7-default-backend   1/1   1   1   41m
deployment.apps/metrics-server-v0.3.6   1/1   1   1   41m
deployment.apps/portworx-operator   1/1   1   1   36m
deployment.apps/portworx-pvc-controller   3/3   3   3   34m
deployment.apps/px-csi-ext   3/3   3   3   34m
deployment.apps/px-prometheus-operator   1/1   1   1   34m
deployment.apps/stackdriver-metadata-agent-cluster-level   1/1   1   1   41m
deployment.apps/stork   3/3   3   3   34m
deployment.apps/stork-scheduler   3/3   3   3   34m

NAME   DESIRED   CURRENT   READY   AGE
replicaset.apps/autopilot-7b4f7f58f4   1   1   1   34m
replicaset.apps/event-exporter-gke-67986489c8   1   1   1   41m
replicaset.apps/kube-dns-6c7b8dc9f9   2   2   2   41m
replicaset.apps/kube-dns-autoscaler-844c9d9448   1   1   1   41m
replicaset.apps/l7-default-backend-56cb9644f6   1   1   1   41m
replicaset.apps/metrics-server-v0.3.6-57bc866888   0   0   0   41m
replicaset.apps/metrics-server-v0.3.6-886d66856   0   0   0   41m
replicaset.apps/metrics-server-v0.3.6-9c5bbf784   1   1   1   40m
replicaset.apps/portworx-operator-bfc87df78   1   1   1   36m
replicaset.apps/portworx-pvc-controller-696959f9bc   3   3   3   34m
replicaset.apps/px-csi-ext-5686675c58   3   3   3   34m
replicaset.apps/px-prometheus-operator-8c88487bc   1   1   1   34m
replicaset.apps/stackdriver-metadata-agent-cluster-level-546484c84b   0   0   0   41m
replicaset.apps/stackdriver-metadata-agent-cluster-level-9548fb7d6   1   1   1   41m
replicaset.apps/stork-75dd8b896   3   3   3   34m
replicaset.apps/stork-scheduler-574757dd8d   3   3   3   34m

NAME   READY   AGE
statefulset.apps/prometheus-px-prometheus   1/1   33m
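Scanning a listing this long by eye is error-prone. As a sketch, `kubectl wait` can block until the Portworx pods report Ready; the selector `name=portworx` is the same one this post uses later to find PX pods, while the helper name `wait_for_portworx` and the default timeout are assumptions.

```shell
# Block until every Portworx pod reports the Ready condition.
# Usage: wait_for_portworx [namespace] [timeout]
wait_for_portworx() {
  local ns="${1:-kube-system}" timeout="${2:-600s}"
  # kubectl wait exits non-zero if the timeout expires first
  kubectl wait pod -l name=portworx -n "$ns" \
    --for=condition=Ready --timeout="$timeout"
}
```

Calling `wait_for_portworx` with no arguments checks the `kube-system` namespace used throughout this post.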

You can test the Portworx features by deploying a StatefulSet application. These are the StorageClasses available in the cluster:

kubectl get sc

NAME   PROVISIONER   RECLAIMPOLICY   VOLUMEBINDINGMODE   ALLOWVOLUMEEXPANSION   AGE
premium-rwo   pd.csi.storage.gke.io   Delete   WaitForFirstConsumer   true   29h
px-db   kubernetes.io/portworx-volume   Delete   Immediate   true   29h
px-db-cloud-snapshot   kubernetes.io/portworx-volume   Delete   Immediate   true   29h
px-db-cloud-snapshot-encrypted   kubernetes.io/portworx-volume   Delete   Immediate   true   29h
px-db-encrypted   kubernetes.io/portworx-volume   Delete   Immediate   true   29h
px-db-local-snapshot   kubernetes.io/portworx-volume   Delete   Immediate   true   29h
px-db-local-snapshot-encrypted   kubernetes.io/portworx-volume   Delete   Immediate   true   29h
px-replicated   kubernetes.io/portworx-volume   Delete   Immediate   true   29h
px-replicated-encrypted   kubernetes.io/portworx-volume   Delete   Immediate   true   29h
px-secure-sc   kubernetes.io/portworx-volume   Delete   Immediate   false   28h
standard (default)   kubernetes.io/gce-pd   Delete   Immediate   true   29h
standard-rwo   pd.csi.storage.gke.io   Delete   WaitForFirstConsumer   true   29h
stork-snapshot-sc   stork-snapshot   Delete   Immediate   true   37m

Alright then, we need to create a cluster-wide secret key to handle our encrypted StorageClasses:

YOUR_SECRET_KEY=this-is-gonna-be-your-secret-key

kubectl -n kube-system create secret generic px-vol-encryption \
  --from-literal=cluster-wide-secret-key=$YOUR_SECRET_KEY
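A fixed literal like the one above is fine for a lab. For anything longer-lived, the key could be generated randomly instead, as in this sketch. `gen_secret_key` and `create_px_secret` are hypothetical helper names; the secret name and key name are the ones used above.

```shell
# Print a random key: 32 random bytes, base64-encoded on a single line.
gen_secret_key() {
  head -c 32 /dev/urandom | base64 | tr -d '\n'
}

# Create the px-vol-encryption secret from a freshly generated key.
# Usage: create_px_secret [namespace]
create_px_secret() {
  local ns="${1:-kube-system}" key
  key="$(gen_secret_key)"
  kubectl -n "$ns" create secret generic px-vol-encryption \
    --from-literal=cluster-wide-secret-key="$key"
}
```

Use `create_px_secret` in place of the literal-based command, not in addition to it, since `kubectl create` fails if the secret already exists.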

Then register this secret with Portworx as the cluster-wide key:

PX_POD=$(kubectl get pods -l name=portworx -n kube-system -o jsonpath='{.items[0].metadata.name}')

kubectl exec $PX_POD -n kube-system -- /opt/pwx/bin/pxctl secrets set-cluster-key \
  --secret cluster-wide-secret-key

Once your cluster-wide secret is in place, you can enable cluster security by editing the StorageCluster object:

kubectl edit storagecluster -n kube-system

...
spec:
  security:
    enabled: true

Then wait for the PX pods to be redeployed. To access your PX cluster after this, you have to make the admin token available on your pods.
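For scripted setups, the security change described above can also be applied without opening an editor. This is a minimal sketch using `kubectl patch` with a JSON merge patch; `enable_px_security` is a hypothetical helper, and the StorageCluster name has to be passed explicitly because `kubectl patch` needs a resource name.

```shell
# Enable security on a StorageCluster with a JSON merge patch.
# Usage: enable_px_security <storagecluster-name> [namespace]
enable_px_security() {
  local name="$1" ns="${2:-kube-system}"
  # Sets spec.security.enabled to true and leaves every other field untouched
  kubectl patch storagecluster "$name" -n "$ns" --type merge \
    -p '{"spec":{"security":{"enabled":true}}}'
}
```

The name to pass is the one reported by `kubectl get storagecluster -n kube-system`.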

PORTWORX_ADMIN_TOKEN=$(kubectl -n kube-system get secret px-admin-token -o json \
  | jq -r '.data."auth-token"' \
  | base64 -d)

PX_POD=$(kubectl get pods -l name=portworx -n kube-system -o jsonpath='{.items[0].metadata.name}')

kubectl exec -it $PX_POD -n kube-system -- /opt/pwx/bin/pxctl context create admin --token=$PORTWORX_ADMIN_TOKEN

PX_POD=$(kubectl get pods -l name=portworx -n kube-system -o jsonpath='{.items[1].metadata.name}')

kubectl exec -it $PX_POD -n kube-system -- /opt/pwx/bin/pxctl context create admin --token=$PORTWORX_ADMIN_TOKEN

PX_POD=$(kubectl get pods -l name=portworx -n kube-system -o jsonpath='{.items[2].metadata.name}')

kubectl exec -it $PX_POD -n kube-system -- /opt/pwx/bin/pxctl context create admin --token=$PORTWORX_ADMIN_TOKEN
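The three commands above cover only the first three pods returned by the selector, while this cluster runs five Portworx nodes. As a sketch, a loop can register the context on every pod; `create_admin_context_everywhere` is a hypothetical helper name, and it reuses the same selector and `pxctl context create` call shown above.

```shell
# Register an "admin" pxctl context on every Portworx pod.
# Usage: create_admin_context_everywhere <token> [namespace]
create_admin_context_everywhere() {
  local token="$1" ns="${2:-kube-system}" pod
  # jsonpath prints all matching pod names separated by spaces
  for pod in $(kubectl get pods -l name=portworx -n "$ns" \
      -o jsonpath='{.items[*].metadata.name}'); do
    echo "creating admin context on $pod"
    kubectl exec "$pod" -n "$ns" -- /opt/pwx/bin/pxctl context create admin \
      --token="$token"
  done
}
```

With the variable set earlier, this would be invoked as `create_admin_context_everywhere "$PORTWORX_ADMIN_TOKEN"`.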

kubectl exec $PX_POD -n kube-system -- /opt/pwx/bin/pxctl secrets k8s login

Test the cluster with a StatefulSet

kubectl create namespace cassandra

Label three of your nodes with the label app=cassandra because this StatefulSet uses this label as node affinity policy.

kubectl label nodes <node01> <node02> <node03> app=cassandra
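If it does not matter which nodes host Cassandra, the node names do not have to be typed by hand. The sketch below labels the first three nodes returned by the API; the helper name `label_cassandra_nodes` and the choice of "first three" are assumptions made for illustration.

```shell
# Label the first N nodes returned by the API with app=cassandra.
# Usage: label_cassandra_nodes [count]
label_cassandra_nodes() {
  local count="${1:-3}" node
  # Print one node name per line, keep the first N, label each one
  kubectl get nodes -o jsonpath='{range .items[*]}{.metadata.name}{"\n"}{end}' \
    | head -n "$count" \
    | while read -r node; do
        kubectl label node "$node" app=cassandra --overwrite </dev/null
      done
}
```

`kubectl get nodes -l app=cassandra` then shows which nodes were picked.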

cassandra.yaml

apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: cassandra
  namespace: cassandra
  labels:
    app: cassandra
spec:
  serviceName: cassandra
  replicas: 3
  selector:
    matchLabels:
      app: cassandra
  template:
    metadata:
      labels:
        app: cassandra
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: app
                operator: In
                values:
                - cassandra
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
              - key: app
                operator: In
                values:
                - cassandra
            topologyKey: kubernetes.io/hostname
      terminationGracePeriodSeconds: 1800
      containers:
      - name: cassandra
        image: cassandra:3.11
        imagePullPolicy: Always
        ports:
        - containerPort: 7000
          name: intra-node
        - containerPort: 7001
          name: tls-intra-node
        - containerPort: 7199
          name: jmx
        - containerPort: 9042
          name: cql
        resources:
          limits:
            cpu: "500m"
            memory: 1Gi
          requests:
            cpu: "500m"
            memory: 1Gi
        securityContext:
          capabilities:
            add:
            - IPC_LOCK
        lifecycle:
          preStop:
            exec:
              command:
              - /bin/sh
              - -c
              - nodetool drain
        env:
        - name: MAX_HEAP_SIZE
          value: 512M
        - name: HEAP_NEWSIZE
          value: 100M
        - name: CASSANDRA_SEEDS
          value: "cassandra-0.cassandra.cassandra.svc.cluster.local"
        - name: CASSANDRA_CLUSTER_NAME
          value: "K8Demo"
        - name: CASSANDRA_DC
          value: "DC1-K8Demo"
        - name: CASSANDRA_RACK
          value: "Rack1-K8Demo"
        - name: POD_IP
          valueFrom:
            fieldRef:
              fieldPath: status.podIP
        readinessProbe:
          tcpSocket:
            port: 9042
          initialDelaySeconds: 30
          timeoutSeconds: 7
        volumeMounts:
        - name: cassandra-data
          mountPath: /var/lib/cassandra
  volumeClaimTemplates:
  - metadata:
      name: cassandra-data
    spec:
      accessModes: [ "ReadWriteOnce" ]
      storageClassName: px-db-encrypted
      resources:
        requests:
          storage: 2Gi
---
apiVersion: v1
kind: Service
metadata:
  name: cassandra
  namespace: cassandra
spec:
  clusterIP: None
  selector:
    app: cassandra
  ports:
  - protocol: TCP
    name: port9042k8s
    port: 9042
    targetPort: 9042

Apply this file:

kubectl apply -f cassandra.yaml

kubectl get pvc -n cassandra

NAME   STATUS   VOLUME   CAPACITY   ACCESS MODES   STORAGECLASS   AGE
cassandra-data-cassandra-0   Bound   pvc-81e11ede-e78a-4fd5-ae64-1ca451d8c8f9   2Gi   RWO   px-db-encrypted   116m
cassandra-data-cassandra-1   Bound   pvc-a83b23ee-1426-4b78-ae29-f6a562701e68   2Gi   RWO   px-db-encrypted   113m
cassandra-data-cassandra-2   Bound   pvc-326a2419-cf50-41d3-93d0-63dbecffbcdd   2Gi   RWO   px-db-encrypted   111m
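The same check can be scripted, for example as a gate in a lab setup script. This sketch reads only the `status.phase` of each claim and succeeds when all of them are `Bound`; `all_pvcs_bound` is a hypothetical helper name.

```shell
# Succeed only if every PVC in the namespace is in the Bound phase.
# Usage: all_pvcs_bound [namespace]
all_pvcs_bound() {
  local ns="${1:-cassandra}" phases phase
  # One phase per line, e.g. Bound or Pending
  phases="$(kubectl get pvc -n "$ns" \
    -o jsonpath='{range .items[*]}{.status.phase}{"\n"}{end}')"
  [ -n "$phases" ] || { echo "no PVCs found in $ns" >&2; return 1; }
  for phase in $phases; do
    [ "$phase" = "Bound" ] || { echo "found PVC in phase $phase" >&2; return 1; }
  done
  echo "all PVCs in $ns are Bound"
}
```

A `Pending` claim on an encrypted StorageClass is often a hint that the cluster-wide secret or the security token setup from the earlier steps is incomplete.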