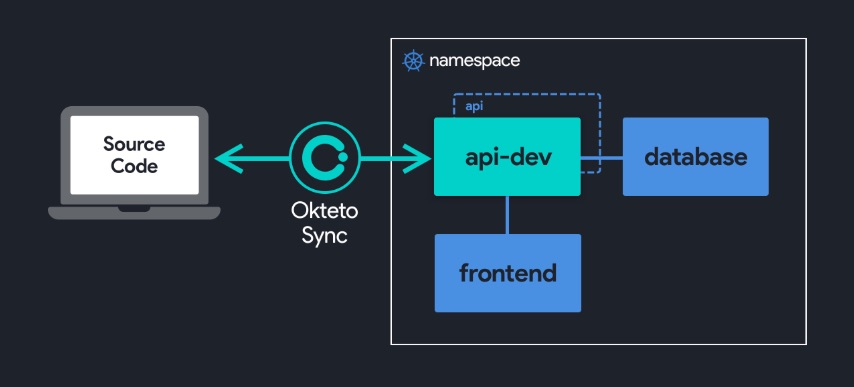

Image Policy Webhooks on Kubernetes (image scanner admission controller)

Adding Trivy Scanner as a custom Admission Controller

We will add an Image Policy Webhook to our kubeadm Kubernetes cluster to harden it: any container whose image has more than 3 CRITICAL vulnerabilities will be prevented from being scheduled on the cluster. The first step is to deploy a scanner. In this instance, I have
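To make the policy concrete, here is a minimal sketch of the decision such a webhook backend might make. It assumes the scanner's findings are available as Trivy JSON reports (the shape produced by `trivy image --format json`) and that the API server sends an `ImageReview` object from the `imagepolicy.k8s.io/v1alpha1` API. The names `MAX_CRITICAL`, `count_critical`, `review_image`, and the `reports` lookup are hypothetical and only for illustration; rejecting an image that has no report is also an assumption, not something the post states.

```python
MAX_CRITICAL = 3  # threshold from the text: more than 3 CRITICAL findings is rejected


def count_critical(trivy_report: dict) -> int:
    """Count CRITICAL findings in a Trivy JSON report."""
    total = 0
    # "Results" or "Vulnerabilities" may be missing or null when nothing was found.
    for result in trivy_report.get("Results") or []:
        for vuln in result.get("Vulnerabilities") or []:
            if vuln.get("Severity") == "CRITICAL":
                total += 1
    return total


def build_response(allowed: bool, reason: str = "") -> dict:
    """Build the ImageReview object the API server expects back."""
    status = {"allowed": allowed}
    if reason:
        status["reason"] = reason
    return {
        "apiVersion": "imagepolicy.k8s.io/v1alpha1",
        "kind": "ImageReview",
        "status": status,
    }


def review_image(image_review: dict, reports: dict) -> dict:
    """Decide on an ImageReview request.

    `reports` is a hypothetical mapping from image name to its Trivy report.
    """
    for container in image_review["spec"]["containers"]:
        image = container["image"]
        report = reports.get(image)
        if report is None:
            # Assumption: fail closed when an image has not been scanned.
            return build_response(False, f"no scan report for {image}")
        critical = count_critical(report)
        if critical > MAX_CRITICAL:
            return build_response(
                False, f"{image} has {critical} CRITICAL vulnerabilities"
            )
    return build_response(True)
```

In a real deployment this function would sit behind an HTTPS endpoint that the API server calls through the ImagePolicyWebhook admission plugin; the sketch only covers the allow/deny decision.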
