Deploy an Elasticsearch cluster with Elastic Cloud on Kubernetes (ECK) on Google Cloud Platform (GCP on GKE) with Terraform – Part I

This will be a very technical post, but I think it will also be quite interesting if you work with cloud technologies.

Elasticsearch is a solid technology, widely used for big data, analytics and so on. However, it is heavy and somewhat difficult to deploy and to keep in a healthy state.

I work a lot with Google Cloud Platform (GCP), which is why I decided to include this part as well.

First things first

If you don't have a GCP account, it is pretty straightforward to get one, and it even comes with some free usage: Google will give you 300 dollars in credit to spend... after you register with your credit card 😉 Go ahead and do it: https://console.cloud.google.com

Also download the gcloud CLI: https://cloud.google.com/sdk/docs/install

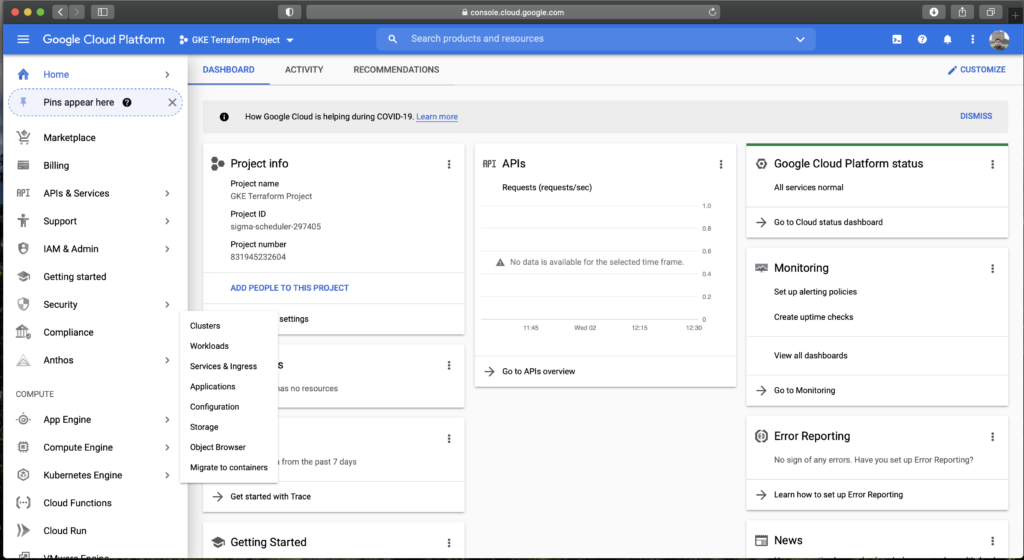

We will be using the project called GKE Terraform project, as you can see below:

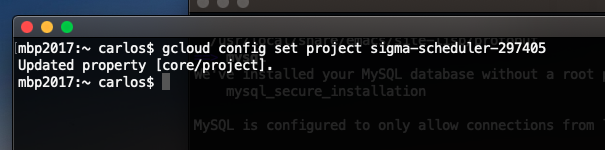

Log in to gcloud from the CLI and complete the browser steps it asks for:

    $ gcloud auth login

Get access to your project:

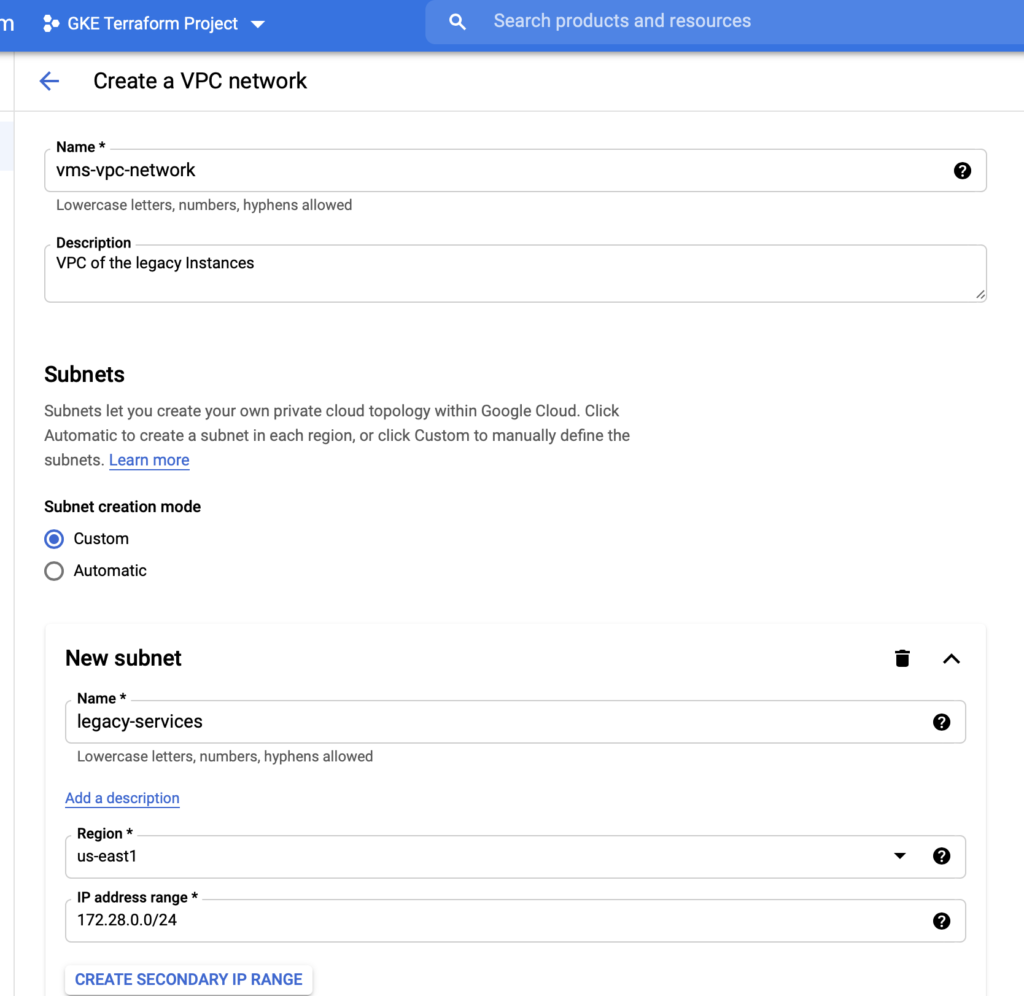

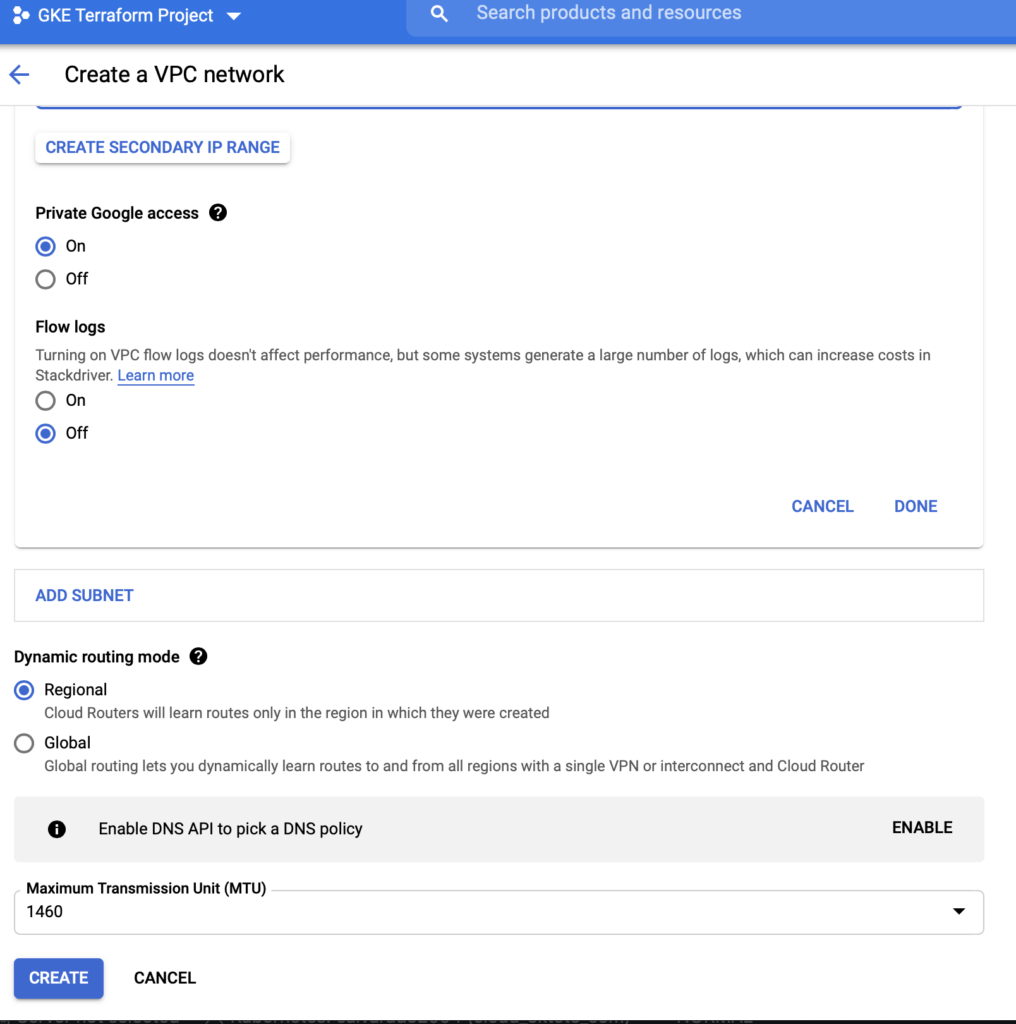

Let's create an empty VPC to simulate an environment that already has resources deployed on it, such as other instances.

Well, at this point we have the very basic infrastructure needed to start using Terraform.

Infrastructure as Code, what does that mean?

Terraform is one of the leading tools for deploying infrastructure this way: you can define a very complex set of infrastructure in code, treating its resources like objects and variables.

The GKE Terraform project is available here:

https://github.com/calvarado2004/terraform-gke

Please note that the nodes are huge. You can go ahead and delete some of those node pools and customize the CPUs and memory according to your needs and budget; I will do that, of course. You can check out another branch with smaller nodes here: https://github.com/calvarado2004/terraform-gke/tree/resize-to-small

ECK can be deployed on a single node, but a minimal enterprise configuration should have:

- One Kibana node

- One Coordinator node

- One Master node

- Two Data nodes

This deployment creates one node pool for each type of node, so that each pool can be autoscaled later in the infrastructure lifecycle. That should give you an idea of the complexity that you can handle easily with Terraform.

Kubernetes has two internal networking layers, one for Pods and one for Services. We will be using the following three CIDRs:

- 170.35.0.0/24 for our GCP VPC, the outermost layer.

- 10.99.240.0/20 for our Kubernetes services.

- 10.96.0.0/14 for our Kubernetes Pods.
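
The Services range in the list above is the last /20 block inside the Pod range 10.96.0.0/14. As a minimal sketch, assuming you would rather derive one range from the other than hard-code both, Terraform's built-in `cidrsubnet` function can compute it. The local and output names here are hypothetical and are not part of the repository:

```hcl
# Sketch only: derive the Services range from the Pod range.
locals {
  pods_cidr = "10.96.0.0/14"

  # A /14 contains 64 blocks of size /20 (6 extra prefix bits),
  # numbered 0 to 63. Index 63 is the last block.
  services_cidr = cidrsubnet(local.pods_cidr, 6, 63)
}

output "derived_services_cidr" {
  # Evaluates to "10.99.240.0/20", the value used in this post.
  value = local.services_cidr
}
```

You can try the expression interactively with `terraform console` before putting it in a configuration.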

If you are on Ubuntu, you can install Terraform this way:

    $ curl -fsSL https://apt.releases.hashicorp.com/gpg | sudo apt-key add -
    $ sudo apt-add-repository "deb [arch=amd64] https://apt.releases.hashicorp.com $(lsb_release -cs) main"
    $ sudo apt-get update && sudo apt-get install terraform

Otherwise, check how to install it on your machine:

https://www.terraform.io/downloads.html

This is the content of the file gke.tf:

    variable "gke_username" {
      default     = ""
      description = "gke username"
    }

    variable "gke_password" {
      default     = ""
      description = "gke password"
    }

    variable "cluster_name" {
      default     = "gke-cluster"
      description = "cluster name"
    }

    variable "zone" {
      default     = "us-east1-b"
      description = "cluster zone"
    }

    # Your pods will have an IP address from this CIDR
    variable "cluster_ipv4_cidr" {
      default     = "10.96.0.0/14"
      description = "internal cidr for pods"
    }

    # Your Kubernetes services will have an IP from this range
    variable "services_ipv4_cidr_block" {
      default     = "10.99.240.0/20"
      description = "internal range for the kubernetes services"
    }

    # GKE cluster
    resource "google_container_cluster" "primary" {
      name     = var.cluster_name
      location = var.zone

      remove_default_node_pool = true
      initial_node_count       = 1

      network    = google_compute_network.vpc-gke.name
      subnetwork = google_compute_subnetwork.subnet.name

      cluster_ipv4_cidr        = var.cluster_ipv4_cidr
      services_ipv4_cidr_block = var.services_ipv4_cidr_block

      min_master_version = "1.17.13-gke.2001"

      master_auth {
        username = var.gke_username
        password = var.gke_password

        client_certificate_config {
          issue_client_certificate = false
        }
      }

      cluster_autoscaling {
        enabled = false
      }
    }

    # Separately Managed Master Pool
    resource "google_container_node_pool" "master-pool" {
      name       = "master-pool"
      location   = var.zone
      cluster    = google_container_cluster.primary.name
      node_count = 1

      autoscaling {
        min_node_count = 1
        max_node_count = 2
      }

      management {
        auto_repair  = true
        auto_upgrade = false
      }

      node_config {
        oauth_scopes = [
          "https://www.googleapis.com/auth/logging.write",
          "https://www.googleapis.com/auth/monitoring",
          "https://www.googleapis.com/auth/devstorage.read_only",
        ]

        labels = {
          es_type = "master_nodes"
        }

        preemptible = false
        image_type  = "ubuntu_containerd"

        # 6 CPUs, 12GB of RAM
        machine_type    = "custom-6-12288"
        local_ssd_count = 0
        disk_size_gb    = 50
        disk_type       = "pd-standard"
        tags            = ["gke-node", "${var.cluster_name}-master"]

        metadata = {
          disable-legacy-endpoints = "true"
        }
      }
    }

    # Separately Managed Data Pool
    resource "google_container_node_pool" "data-pool" {
      name       = "data-pool"
      location   = var.zone
      cluster    = google_container_cluster.primary.name
      node_count = 2

      autoscaling {
        min_node_count = 2
        max_node_count = 4
      }

      management {
        auto_repair  = true
        auto_upgrade = false
      }

      node_config {
        oauth_scopes = [
          "https://www.googleapis.com/auth/logging.write",
          "https://www.googleapis.com/auth/monitoring",
          "https://www.googleapis.com/auth/devstorage.read_only",
        ]

        labels = {
          es_type = "data_nodes"
        }

        preemptible = false
        image_type  = "ubuntu_containerd"

        # 14 CPUs, 41GB of RAM
        machine_type    = "custom-14-41984"
        local_ssd_count = 0
        disk_size_gb    = 50
        disk_type       = "pd-standard"
        tags            = ["gke-node", "${var.cluster_name}-data"]

        metadata = {
          disable-legacy-endpoints = "true"
        }
      }
    }

    # Separately Managed Coordinator Pool
    resource "google_container_node_pool" "coord-pool" {
      name       = "coord-pool"
      location   = var.zone
      cluster    = google_container_cluster.primary.name
      node_count = 1

      autoscaling {
        min_node_count = 1
        max_node_count = 2
      }

      management {
        auto_repair  = true
        auto_upgrade = false
      }

      node_config {
        oauth_scopes = [
          "https://www.googleapis.com/auth/logging.write",
          "https://www.googleapis.com/auth/monitoring",
          "https://www.googleapis.com/auth/devstorage.read_only",
        ]

        labels = {
          es_type = "coordinator_nodes"
        }

        preemptible = false
        image_type  = "ubuntu_containerd"

        # 6 CPUs, 22GB of RAM
        machine_type    = "custom-6-22528"
        local_ssd_count = 0
        disk_size_gb    = 50
        disk_type       = "pd-standard"
        tags            = ["gke-node", "${var.cluster_name}-coord"]

        metadata = {
          disable-legacy-endpoints = "true"
        }
      }
    }

    # Separately Managed Kibana Pool
    resource "google_container_node_pool" "kibana-pool" {
      name       = "kibana-pool"
      location   = var.zone
      cluster    = google_container_cluster.primary.name
      node_count = 1

      autoscaling {
        min_node_count = 1
        max_node_count = 2
      }

      management {
        auto_repair  = true
        auto_upgrade = false
      }

      node_config {
        oauth_scopes = [
          "https://www.googleapis.com/auth/logging.write",
          "https://www.googleapis.com/auth/monitoring",
          "https://www.googleapis.com/auth/devstorage.read_only",
        ]

        labels = {
          es_type = "kibana_nodes"
        }

        preemptible = false
        image_type  = "ubuntu_containerd"

        # 4 CPUs, 13GB of RAM
        machine_type    = "custom-4-13312"
        local_ssd_count = 0
        disk_size_gb    = 50
        disk_type       = "pd-standard"
        tags            = ["gke-node", "${var.cluster_name}-kibana"]

        metadata = {
          disable-legacy-endpoints = "true"
        }
      }
    }

    output "kubernetes_cluster_name" {
      value       = google_container_cluster.primary.name
      description = "GKE Cluster Name"
    }
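
The four node pool resources in gke.tf differ only in their name, label, machine type and autoscaling limits. As a rough sketch, assuming you wanted to cut that repetition, the same pools could be declared once with `for_each`. The local `es_pools` and the resource name `es` are hypothetical and do not exist in the repository:

```hcl
# Hypothetical refactor: one resource block that produces the four pools.
locals {
  es_pools = {
    master = { es_type = "master_nodes", machine_type = "custom-6-12288", min = 1, max = 2 }
    data   = { es_type = "data_nodes", machine_type = "custom-14-41984", min = 2, max = 4 }
    coord  = { es_type = "coordinator_nodes", machine_type = "custom-6-22528", min = 1, max = 2 }
    kibana = { es_type = "kibana_nodes", machine_type = "custom-4-13312", min = 1, max = 2 }
  }
}

resource "google_container_node_pool" "es" {
  # One pool per map entry; each.key is "master", "data", "coord" or "kibana".
  for_each = local.es_pools

  name       = "${each.key}-pool"
  location   = var.zone
  cluster    = google_container_cluster.primary.name
  node_count = each.value.min

  autoscaling {
    min_node_count = each.value.min
    max_node_count = each.value.max
  }

  management {
    auto_repair  = true
    auto_upgrade = false
  }

  node_config {
    oauth_scopes = [
      "https://www.googleapis.com/auth/logging.write",
      "https://www.googleapis.com/auth/monitoring",
      "https://www.googleapis.com/auth/devstorage.read_only",
    ]

    labels = {
      es_type = each.value.es_type
    }

    preemptible     = false
    image_type      = "ubuntu_containerd"
    machine_type    = each.value.machine_type
    local_ssd_count = 0
    disk_size_gb    = 50
    disk_type       = "pd-standard"
    tags            = ["gke-node", "${var.cluster_name}-${each.key}"]

    metadata = {
      disable-legacy-endpoints = "true"
    }
  }
}
```

Keep in mind that this changes the resource addresses (for example to `google_container_node_pool.es["master"]`), so on an already deployed cluster Terraform would plan to destroy and recreate the pools unless the state entries are moved first with `terraform state mv`.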

And this is the content of the file vpc.tf:

    variable "project_id" {
      description = "project id"
    }

    variable "region" {
      description = "region"
    }

    provider "google" {
      project = var.project_id
      region  = var.region
    }

    # VPC
    resource "google_compute_network" "vpc-gke" {
      name                    = "${var.cluster_name}-vpc"
      auto_create_subnetworks = "false"
    }

    # Subnet
    resource "google_compute_subnetwork" "subnet" {
      name          = "${var.cluster_name}-subnet"
      region        = var.region
      network       = google_compute_network.vpc-gke.name
      ip_cidr_range = "170.35.0.0/24"
    }

    # Peering between OLD VMs vpc and GKE K8s vpc
    resource "google_compute_network_peering" "to-vms-vpc" {
      name         = "to-vms-vpc-vpc-network"
      network      = google_compute_network.vpc-gke.id
      peer_network = "projects/sigma-scheduler-297405/global/networks/vms-vpc-network"
    }

    resource "google_compute_network_peering" "to-gke-cluster" {
      name         = "to-gke-cluster-vpc-network"
      network      = "projects/sigma-scheduler-297405/global/networks/vms-vpc-network"
      peer_network = google_compute_network.vpc-gke.id
    }

    output "region" {
      value       = var.region
      description = "region"
    }

    # Enable communication from GKE pods to external instances, networks and services outside the Cluster.
    resource "google_compute_firewall" "gke-cluster-to-all-vms-on-network" {
      name = "gke-cluster-k8s-to-all-vms-on-network"

      # NOTE: no "vpc-portal" network is declared in the files shown in this post;
      # this reference must point at a network that exists in your configuration.
      network = google_compute_network.vpc-portal.id

      allow {
        protocol = "tcp"
      }

      allow {
        protocol = "udp"
      }

      allow {
        protocol = "icmp"
      }

      allow {
        protocol = "esp"
      }

      allow {
        protocol = "ah"
      }

      allow {
        protocol = "sctp"
      }

      # Traffic coming from the Pod CIDR
      source_ranges = ["10.96.0.0/14"]
    }

Let's deploy this GKE Cluster with Terraform!
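
One thing to prepare first: vpc.tf declares `project_id` and `region` without default values, so Terraform will prompt for them unless you supply them. A minimal sketch of a `terraform.tfvars` file, which Terraform loads automatically from the working directory, is shown below. Both values are placeholders that I am assuming for illustration; the region is chosen only because it matches the default zone `us-east1-b` in gke.tf:

```hcl
# terraform.tfvars (placeholder values, replace with your own)

# The ID of your GCP project, not its display name.
project_id = "my-gcp-project-id"

# Must be the region that contains the zone used by the cluster.
region = "us-east1"
```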

Deploying a whole cluster is quite easy:

    $ git clone https://github.com/calvarado2004/terraform-gke.git
    $ cd terraform-gke
    $ git checkout resize-to-small
    Switched to branch 'resize-to-small'
    Your branch is up to date with 'origin/resize-to-small'.
    $ terraform init

    Initializing the backend...

    Initializing provider plugins...
    - Finding latest version of hashicorp/google...
    - Installing hashicorp/google v3.49.0...
    - Installed hashicorp/google v3.49.0 (signed by HashiCorp)

    The following providers do not have any version constraints in configuration,
    so the latest version was installed.

    To prevent automatic upgrades to new major versions that may contain breaking
    changes, we recommend adding version constraints in a required_providers block
    in your configuration, with the constraint strings suggested below.

    * hashicorp/google: version = "~> 3.49.0"

    Terraform has been successfully initialized!

    You may now begin working with Terraform. Try running "terraform plan" to see
    any changes that are required for your infrastructure. All Terraform commands
    should now work.

    If you ever set or change modules or backend configuration for Terraform,
    rerun this command to reinitialize your working directory. If you forget, other
    commands will detect it and remind you to do so if necessary.
    $ terraform plan -out=gke-cluster.plan
    $ terraform apply "gke-cluster.plan"
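
The `terraform init` output above recommends pinning the provider version. A minimal sketch of what that could look like, using the constraint string that the output itself suggests. The file name `versions.tf` is only an assumption; the block can live in any `.tf` file of the project:

```hcl
# versions.tf (hypothetical file name)
terraform {
  required_providers {
    google = {
      source = "hashicorp/google"

      # Constraint suggested by "terraform init": allows 3.49.x patch releases only.
      version = "~> 3.49.0"
    }
  }
}
```

After adding the block, run `terraform init` again so the constraint is picked up.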